Art of the State

The in-development hocc parser

generator recently reached the significant

milestone of being capable of generating state tables using any of the LR(1), PGM(1), or LALR(1)

algorithms. All of these algorithms date back to the 1960s and 1970s, but there’s a newcomer,

IELR(1), which was published this century. This seemed like a good time to tie off a loose end and

write a detailed critique of IELR(1) and show that it does not advance the state of the

art. But as I prepared that critique I became

convinced that IELR(1) is the state of the art. There is an art of the state (machine), and

IELR(1) has it in spades. Read on for a shared glimpse of its merits.

LR(1), PGM(1), LALR(1)

hocc can currently generate parsing tables for three parser algorithms, all in the

LR family.

- LR(1): The “Left to right Rightmost recursive” algorithm [1], sometimes referred to as “Canonical” LR(1), is the most general LR(1) algorithm. Unfortunately, the parser tables tend to be extremely bloated with unnecessary near-identical states. This bloat precluded real-world use, at first because computers weren’t powerful enough to cope, and later because more svelte (and more limited) algorithms stole its thunder.

- PGM(1): The “Practical General Method” [2] algorithm remained almost unknown for decades, until the Menhir parser generator implemented it [3]. PGM(1) merges near-identical states without creating any parsing conflicts, unless there would have been conflicts even for an LR(1) parser. Until last week PGM(1) was to my way of thinking unbeatable in terms of both generality and practicality.

- LALR(1): The “Look-Ahead” LR(1) algorithm [4] merges all near-identical states, even if doing so introduces new reduce-reduce conflicts. It is possible to specify grammars that do not induce any such conflicts, but it requires a nuanced understanding of the algorithm to distinguish LALR(1)-induced conflicts from those inherent to the grammar. Furthermore, “best practice” has long been to develop grammars with some fixed number of known conflicts which are de facto resolved by the parser generator, yet during grammar development it is entirely possible for harmless conflicts to be replaced by harmful ones. Unless the developer is extremely careful bad things can, and do, happen.

I implemented all three algorithms in hocc primarily as a grammar analysis aid; in practice my

intent was to only develop actual parsers with PGM(1), with the expectation that they would always

be functionally identical to LR(1) parsers but much faster/smaller, and that often the parsers would

happen to be functionally identical to LALR(1) parsers. But it turns out that PGM(1) parsers

sometimes differ from LR(1) parsers!

A troublesome grammar

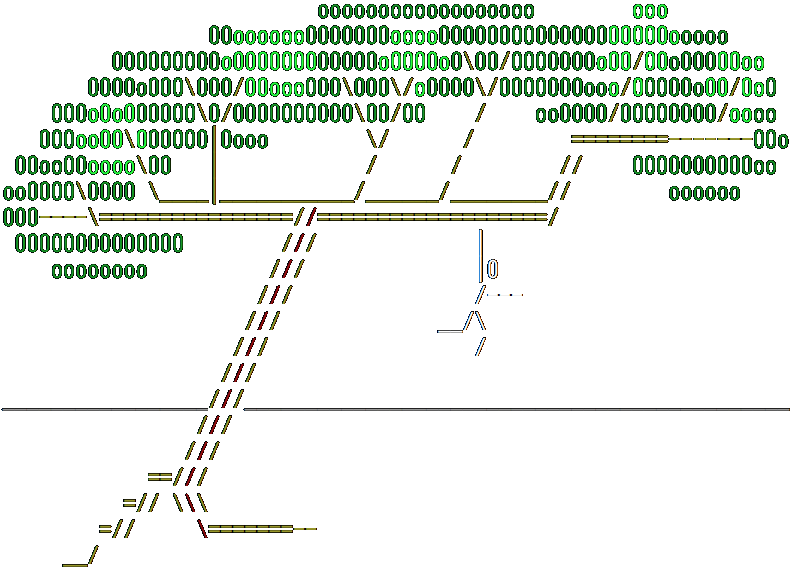

Consider the following grammar, adapted from Figure 1 of the IELR(1) paper [5]:

hocc

left p

token Ta prec p

token Tb

start S ::=

| Ta A Ta prec p

| Tb A Tb

nonterm A prec p ::=

| Ta

| Ta Ta

LR(1)

The grammar contains an ambiguity that makes it non-LR(1), but the left-associative precedence is sufficient for resolving the ambiguity. Here is the ambiguous state that results [6], absent the precedence (full report):

State 4

Kernel

[A ::= Ta ·, {Ta}]

[A ::= Ta · Ta, {Ta}]

Actions

Ta :

CONFLICT ShiftPrefix 9

CONFLICT Reduce A ::= Ta

And here’s how it resolves in the context of the LR(1) algorithm (full report):

State 4

Kernel

[A ::= Ta ·, {Ta}] prec p

[A ::= Ta · Ta, {Ta}] prec p

Actions

Ta : Reduce A ::= Ta prec p

PGM(1)

Here’s where things get interesting. Using the PGM(1) algorithm, we get a different conflict (full report):

State 4

Kernel

[A ::= Ta ·, {Ta, Tb}]

[A ::= Ta · Ta, {Ta, Tb}]

Actions

Ta :

CONFLICT ShiftPrefix 8

CONFLICT Reduce A ::= Ta

Tb : Reduce A ::= Ta

And conflict resolution deals a killing blow (full report):

State 4

Kernel

[A ::= Ta ·, {Ta, Tb}] prec p

[A ::= Ta · Ta, {Ta, Tb}] prec p

Actions

Ta : Reduce A ::= Ta prec p

Tb : Reduce A ::= Ta prec p

Why does this happen? Take a close look at the follow

sets and you’ll see that the LR(1) kernel items only

contain Ta in their follow sets, whereas the PGM(1) kernel item follow sets additionally contain

Tb. The PGM(1) state is actually the merge of two LR(1) states. PGM(1) guarantees no additional

conflicts where none exist in the LR(1) states, but it does not guarantee identical conflicts.

For this example the result is a badly broken parser.

How much does this matter in practice? I’m not sure. It so happens that the large and complex Lyken

grammar [7] generates identical parsers with the PGM(1) and LALR(1) algorithms, which

suggests that I adopted grammar specification practices which don’t even trip on LALR(1)

inadequacies, let alone those of PGM(1). But the IELR(1) paper reports uncovering long-undiscovered

gawk and gpic (part of

groff) parser bugs. I think it likely that those parsers

did not explicitly resolve all conflicts, which makes it much easier for grammar flaws to go

undetected, but I have not investigated further.

IELR(1)

The PGM(1) algorithm optimistically merges near-identical states, and it always gets things right as long as there are no conflicts in the specification. In contrast the IELR(1) algorithm starts off merging all near-identical states, conflicts be damned (i.e. LALR(1)), then it analyzes all the resulting conflicts to determine which are induced by merging in order to inform re-generation which avoids all troublesome merges.

The PGM(1) paper [2] is somewhat intimidating in its extreme brevity, whereas the IELR(1)

paper [5] is somewhat intimidating in its extreme thoroughness. And the IELR(1) algorithm

is certainly more complicated due to its two-pass nature. Nonetheless, the concept is

straightforward, and given the proper foundation, there are only a few auxiliary data structures

required to perform the analysis. It appears that the bison parser

generator has remained the sole implementation of IELR(1) for

over a decade now, but I hope to report back soon about hocc becoming the second implementation.

Citations/footnotes

-

Donald E. Knuth, “On the Translation of Languages from Left to Right”, Information and Control 8:607-639, 1965. ↩

-

David Pager, “A Practical General Method for Constructing LR(k) Parsers”, Acta Informatica 7:249-268, 1977. ↩ ↩2

-

Menhir initially exposed me to the PGM(1) algorithm, after which I implemented it in the Python

Parsingparser generator. ↩ -

Frank DeRemer, “Practical Translators for LR(k) languages”, Ph.D Dissertation, Department of Electrical Engineering, Massachusetts Institute of Technology, Cambridge, 1969. ↩

-

Joel E. Denny and Brian A. Malloy, “The IELR(1) algorithm for generating minimal LR(1) parser tables for non-LR(1) grammars with conflict resolution”, Science of Computer Programming, 75(11):943-979, 2010. ↩ ↩2

-

All in-line state descriptions were generated via

hocc’s text report generation facility, e.g.hocc -s IelrFig1_prec -txt. The linked full reports were generated viahocc’s html report generation facility. ↩ -

Lyken was an uncompleted programming language that incubated Parsing. ↩