Parsing Retrospective

Hemlock needs a parser, and since it will use LR(1)

parsing, that means using a parser generator. Hemlock

will be self-hosting, which precludes using an existing parser generator, ergo the creation of

hocc. Fortunately I’ve already

reinvented the wheel once (that’s the way I

roll) in the form of the Python-based

Parsing module, and it’s a lot easier to copy my own work

than that of others. Joking aside, the Parsing module synthesizes the state of the art in parser

generation as of fifteen years ago, and it has one still-distinctive feature that makes developing

reliable parsers much more tractable. This post is a Parsing retrospective that serves as

background information for hocc development.

Before Parsing

A long series of projects led to Parsing, and in retrospect some of those projects were overly

ambitious. But hey, I learned a lot and some of the software ended up being broadly useful.

- As an undergraduate computer science student I was assigned a senior group project that required generating PostScript, a postfix printer language which is the spiritual ancestor of the Portable Document Format (PDF). I had already become enamored with postfix languages thanks to my trusty HP 48GX calculator. Add to that a growing fascination for embeddable languages like Tcl, and a few years later I developed the Onyx programming language.

- As a graduate student developing the Crux bioinformatics software package, I needed flexible software for gluing together experimental pipelines. Onyx was the obvious choice because hey, I wrote it! But if you’ve ever tried to write large systems in a stack-based language, you probably know what went wrong — stack management bugs caused non-local failures. Enter Python. All was well… for a while.

- Adding a feature to the Python version of Crux was a day of bliss, followed by a week or two of soul-crushing conversion to a natively compiled module written in C. The problem I kept running into was that although Python was elegant for my purposes, it was way too slow for real-world dataset sizes, like, two orders of magnitude too slow.

-

After the umpteenth rewrite of a Python module in C, I snapped and decided to write a gradually typed language called Lyken. I never completed Lyken, however some pieces of Lyken technology survive.

-

jemalloc started out as Lyken’s memory allocator. It’s funny how poor a fit the allocator was for Lyken’s actual needs, but those ~400 hours of potentially wasted effort were salvaged thanks to several thousand more hours of work, initially by myself, and over time by many others.

-

Parsing, the subject of this post.

-

Enter Parsing

Let’s back up just a tiny bit. I didn’t just start writing a parser generator, because that’s capital-H Hard (TM). I used the most excellent Lemon parser generator. I remember Lemon fondly for a few reasons:

- Production parameters are named rather than numbered. This seems like a small detail, but it’s infuriating to track down a bug that was simply due to slightly changing a production and failing to manually renumber every parameter reference within the reduction code.

- The diagnostic output is excellent, a huge help when diagnosing grammar flaws. This strongly

influenced the level of detail in the log files generated by

Parsing. - Parsers were reentrant by default, and integrating with a hand-written scanner was straightforward.

Why did I leave Lemon behind? Well, the bootstrap Lyken implementation was primarily written in Python, and marshaling the C-based parsing results into Python data structures was both brittle and slow. Once the Lyken grammar reached a few hundred productions the overhead became untenable. Next steps:

- Dust off the (red) Dragon Book.

- ???

- Profit!

That was the idea, anyway. I wrote several blog posts during this adventure, so I’ll avoid repeating myself (much) and just add some retrospective commentary here.

- Deprogramming the LALR(1) bias: I went through the process of implementing SLR(1) and then canonical LR(1) parser generation, and I couldn’t figure out why the Dragon Book promoted LALR(1). The reader comments were absolute gold, and they incidentally saved me from failure [1]. This is how I learned about Menhir and the then-obscure Pager algorithm [2].

- Parser generation algorithms of a bygone era: In addition to summarizing parser generator algorithms, this post discussed some of the GLR parsing algorithms I was working with. That proved to be a dead end, a negative result to perhaps expound on another time.

- More forgotten parser generation

algorithms: Modern

parser generators use precedence to resolve ambiguities, but in my considered opinion, only

Parsingprovides the precision and power one should expect. I have a lot more to say on this topic, which will have to wait for another time. - Parsing.py parser generator is now available

- A simple Parsing-based parser example

- Why the overwhelming

silence?: At the time I wrote

Parsingthere weren’t many options for robust parsing in Python, and I naïvely imagined that it would be broadly adopted. Alas, it was not to be. The reader comments were a good lesson on marketing.

Current Parsing

Parsing lives on in EdgeDB, and the developers continue to

maintain it as a discrete repository that is available as a

PyPI package.

It bears mentioning only for completeness that I continued to develop Parsing for my own needs in

Crux, and thereafter in Lyken (unreleased). Parser generation

time was an ongoing issue for Lyken, and I eventually resorted to using

Cython to gain a ~10X speedup. However, Cython continued to evolve, and

version 0.11.3 (yes, all the way back in 2009) is the

last that works with this fork of Parsing.

Citations/footnotes

-

In the comments you will see that prior to implementing the Pager algorithm I was terminating multi-day parser generation attempts. Nearly two years after I posted, Paulo Barreto came along and determined that I must have done something wrong. He was right. Prior to adopting Pager’s algorithm I was generating states in depth-first order, without any memoization mechanism that would have avoided identical states. This allowed an unnecessary combinatorial explosion of states.

How did I miss such a basic problem? Well, this was over 15 years ago, so I’m not sure, though I do recall rationalizing the terrible performance as an outcome of exponential time and space complexity. I suspect I didn’t have a clear understanding of the tractable algorithmic complexity of LR(1), versus the potentially intractable algorithmic complexity of LR(k).

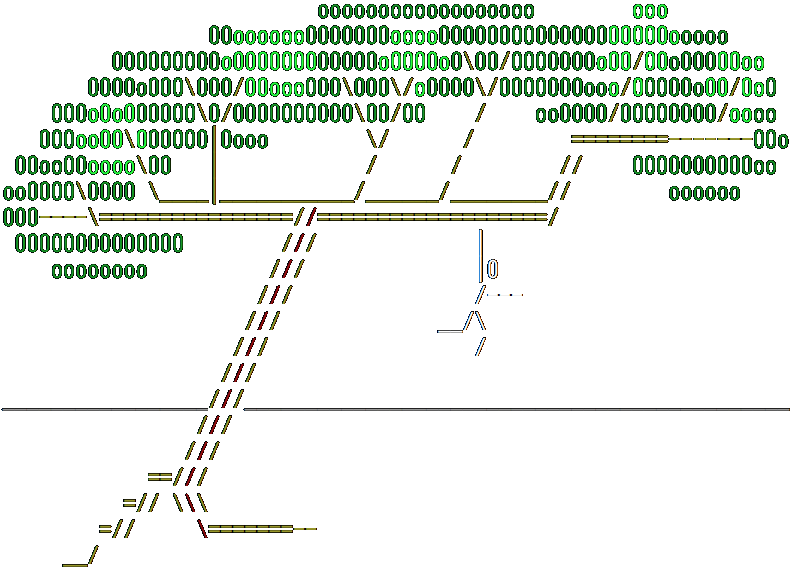

Let’s put some numbers to how things might have gone absent my canonical LR(1) implementation mistake. The last time I worked on Lyken was in 2013, but with a bit of effort I managed to get its pieces compiling. The last version of its grammar comprised 98 tokens, 128 non-terminals, and 692 productions. The

Parsingmodule generates a parser with 1374 states, and with minor modification it generates a canonical LR(1) parser with 19589 states. Ouch, that’s over 14 times as many states, and it takes ~10 times as long to generate, but I probably would have just dealt with that, perhaps spent some time optimizing the code to make it fast enough to be tolerable. Oh well, it was a rough ride, but in retrospect I’m glad to have taken this twisty path to enlightenment. ↩ -

David Pager, “A practical general method for constructing LR(k) parsers”, Acta Informatica, 7:249–268, 1977. ↩