Work Stealing - Intro

Hemlock has plenty of killer features (safety, deterministic GC, C-like speed, …), but work

stealing is probably the most killer of them all. Our major goal with Hemlock is to eliminate the

complexity of parallelization of pure computation. We want it to be completely automatic. It’s not

enough to make better abstractions (e.g.

folly/executors,

coroutines with async/await,

multiprocessing) to make it easier to

manually express parallelization of pure computation. With intentional care in language design and

implementation, it is possible to parallelize pure computation automatically and intelligently

without such abstractions.

I recently started re-reading Hennessy and Patterson’s “Computer Architecture” and a call-out in the book’s introduction helps to express what Hemlock’s work stealing actually changes about the way we do computing.

Whereas the compiler and hardware conspire to exploit [instruction-level parallelism (ILP)] implicitly without the programmer’s attention, [data-level parallelism (DLP)], [thread-level parallelism (TLP)], and [request-level parallelism (RLP)] are explicitly parallel, requiring the restructuring of the application so that in can exploit explicit parallelism. In some instances, this is easy; in many, it is a major new burden for programmers. [1]

I’ve spent most of my career taking simple problems and implementing complex solutions. Mostly, I built things for speed, and for speed I needed parallelism. I think extracting DLP performance improvements in my own work has been the biggest sink of my mental resources, and the biggest source of my bugs. It’s not entirely a complaint. Exploiting DLP is a lot of fun! Ultimately, though, it’s a drain on our (programmers’) ability to get things done quickly and correctly. That’s why we’re creating Hemlock. In Hemlock, DLP exploitation will be solved by the compiler. Programmers will not need to restructure their code to benefit.

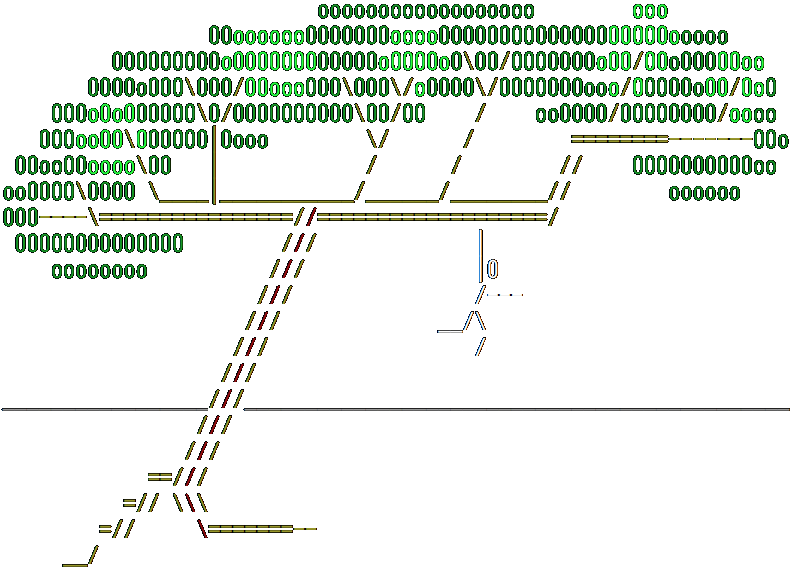

# Recursively evaluate smaller subdivisions of an array of

# unsigned ints, summing results of subdivisions together

# as recursion stack unwinds.

# val sum: _&array uns -> uns

let sum vals =

# Application of `f` is a closure with an `ld` effect,

# reading from the outer function's `vals`. It's safe

# to parallelize.

# val f: uns -> uns >(&array uns)-> uns

let rec f i j =

match j - i with

| 0 -> 0

| 1 -> Array.get i vals

| _ ->

let mid = (i + j) / 2

# The next two statements can be computed in

# parallel. While we recurse into the first

# call, the second call is stealable.

let ltotal = f i mid

let rtotal = f mid j

ltotal + rtotal

f 0 (Array.length vals)

# Generate an array of n random unsigned ints. Generating

# random values has an `os` effect.

# val gen_random_vals ^m: uns >os-> ^m&array uns

let gen_random_vals n = ...

# All three of the following sum statements can be computed

# in parallel. Furthermore, each level of recursion within

# each statement has a parallelizable recursive call. If

# there's enough work to do and we have idle CPUs, it's

# worth doing. There are a LOT of work stealing

# opportunities in here.

let x = sum (gen_random_vals 1_000)

let y = sum (gen_random_vals 1_000_000)

let z = sum (gen_random_vals 1_000_000_000)

[2]

In any other language, I’d expect an equivalent snippet to run on a single thread. If I were to choose to manually transform this function to extract a bit of parallelism, I’d spend a lot of mental effort figuring out how to properly segment the work. I might find that I almost never use the function on a large array, so it’s best to avoid splitting the work at all in most cases. Or, even if I have large enough arrays to make it worth splitting, perhaps it’s not worth splitting amongst all available sixty-four cores. Additionally, if I have to manually express how to split inputs and join results, or require mutual exclusion, I’m pretty much guaranteed to write a few bugs along the way. In the end, I think I’d spend at least ten times the effort parallelizing this function than I did just writing the basic implementation.

In Hemlock, I won’t waste time with any of that. I expect the runtime executors to choose better parallelization opportunities via work stealing than I would have chosen via manually transforming the code. This is the killer feature I want.

In this blog series

Jason and I finished our design for work stealing this summer. There’s plenty of material to nerd out about. I’m going to cover it over a series of blog posts.

- Type system - Codifying stealability with

mutabilityandeffect - Actors - Making work stealable from an actor’s execution stack

- Executors - Choosing the best work to steal

- Determinism - Making speed the only side-effect of work stealing

Citations & footnotes

-

Hennessy, John L., and David A. Patterson. Computer Architecture: A Quantative Approach. Sixth ed., Morgan Kaufmann Publishers, Cambridge, MA, 2019, p. 5 ↩

-

Array.reducein Hemlock’s standard library makes mysumfunction superfluous.let x = Array.reduce Int.( + ) (gen_random_vals 1_000) let y = Array.reduce Int.( + ) (gen_random_vals 1_000_000) let z = Array.reduce Int.( + ) (gen_random_vals 1_000_000_000)